With Orange Pi 5's NPU for Lidar Modeling & Object Pascal

2025.10.10 14:09

Q. How to use a premade model with Object Pascal?

That's an excellent and practical question. Integrating pre-trained models (like those based on PointNet or a simple image-based CNN) into a niche language like Object Pascal is one of the biggest challenges in deploying robotics code.

Since Object Pascal (e.g., in Lazarus or Free Pascal) doesn't have native, high-level deep learning libraries like Python's TensorFlow or PyTorch, you have two primary methods:

1. Using a C/C++ Library Wrapper (The Recommended Way)

The most robust and common approach is to treat the deep learning model as an external service implemented in a more suitable language and link to it.

A. Compile the Model to a Shared Library (.dll or .so)

- Export the Model: Export the trained model to ONNX (Open Neural Network Exchange), which is an interchange format rather than a framework. PyTorch can export to ONNX directly; TensorFlow models are usually converted with the separate tf2onnx tool.

- Use an Inference Engine: Use a dedicated inference engine library with a C/C++ API, such as ONNX Runtime or OpenVINO, to load the ONNX model. (TensorFlow Lite is another C/C++ option, but it loads its own .tflite format, not ONNX.)

- Create a Wrapper: Write a simple C/C++ wrapper library (a .dll for Windows or a .so for Linux) that has functions like InitializeModel(), ProcessLidarData(input_array), and GetOutput().

B. Link to Object Pascal

Your Object Pascal code then only needs to call these exported functions.

This keeps your fast, real-time Object Pascal core intact while outsourcing the heavy computation to the optimized C/C++ library.

2. Using a Native Object Pascal NN Library (The Simpler Way)

If your model is small and simple (e.g., a basic, shallow feed-forward network), you might find a native Object Pascal library.

- PANN (Pascal Artificial Neural Network): This is one of the more developed libraries for Object Pascal/Free Pascal. It focuses on basic neural networks and is suitable for simple tasks like classification.

- Math Libraries: You would load the network's weights and biases (which are just large matrices of numbers) and then use Pascal's own matrix math libraries to manually perform the forward pass (matrix multiplication and activation function).
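The manual forward pass described above comes down to a few nested loops per layer. Here is a minimal sketch of one fully connected layer with a ReLU activation, written in C++ to match the wrapper code later in this post; the same loops translate line for line to Object Pascal. The `DenseLayer` struct and `Forward` function are hypothetical names for illustration, not part of any library.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// One fully connected layer: y = relu(W * x + b).
// W is stored row-major with `outputs` rows and `inputs` columns.
struct DenseLayer {
    std::size_t inputs;
    std::size_t outputs;
    std::vector<float> weights; // size: outputs * inputs
    std::vector<float> biases;  // size: outputs
};

// Computes the layer output for one input vector.
// Returns an empty vector if the sizes do not match.
std::vector<float> Forward(const DenseLayer& layer, const std::vector<float>& x) {
    if (x.size() != layer.inputs ||
        layer.weights.size() != layer.inputs * layer.outputs ||
        layer.biases.size() != layer.outputs) {
        return {};
    }
    std::vector<float> y(layer.outputs);
    for (std::size_t row = 0; row < layer.outputs; ++row) {
        float sum = layer.biases[row];
        for (std::size_t col = 0; col < layer.inputs; ++col) {
            sum += layer.weights[row * layer.inputs + col] * x[col];
        }
        y[row] = std::max(0.0f, sum); // ReLU activation
    }
    return y;
}
```

A shallow network is just several such layers applied in sequence, with the output of one layer passed as the input of the next.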

Limitation: This method is generally not feasible for large, modern architectures like PointNet or complex CNNs due to the lack of optimized matrix algebra and GPU acceleration in native Object Pascal deep learning tools.

Recommendation: Focus on Method 1 (C/C++ Wrapper) for any serious robotics or perception work.

=================================================================================

Q. It will be running on an Orange Pi 5, which has an NPU. Therefore, the ONNX model should be converted to an RKNN model first.

That is the perfect piece of information for planning your deployment! The Orange Pi 5's NPU (Neural Processing Unit) dramatically changes the strategy for integrating a model like PointNet, making it feasible to run complex deep learning models in real-time.

You are correct: the standard pathway for a Rockchip NPU (the Orange Pi 5 is built on the RK3588S) is to convert your trained ONNX model into Rockchip's own .rknn format with Rockchip's toolchain. The NPU runtime loads .rknn files, not ONNX files.

Here is the step-by-step process you will need to follow. The C/C++ wrapper idea stays the same; only the generic inference engine behind it is replaced by the Rockchip-specific toolchain and runtime:

1. The Model Conversion Pipeline

This process happens on your development PC and prepares the model for the Orange Pi 5:

- Train the PointNet Model:
  - Use PyTorch or TensorFlow (in Python) to train your 2D PointNet model on your Lidar data.
  - Save the trained model's weights.

- Export to ONNX:
  - Convert the trained model from its native format (e.g., a PyTorch .pth file) to the intermediate ONNX format, which the Rockchip converter accepts as input.

- Convert to RKNN Model:
  - Use Rockchip's Python-based conversion toolkit. For the RK3588 family this is RKNN-Toolkit2; the original RKNN-Toolkit targets older chips. It takes the ONNX file and converts it into the proprietary .rknn format required by the NPU.
  - This step can also quantize the model (typically to INT8, using a small calibration set of representative inputs). Quantization reduces model size and inference cost at the price of some accuracy, which suits a small board like the Orange Pi 5.
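To make the quantization step less abstract, here is a minimal sketch of per-tensor affine INT8 quantization, the general idea behind this kind of conversion. It is an illustration only: the exact scheme the Rockchip toolkit applies is not described in this post, and `QuantParams`, `ChooseQuantParams`, `Quantize`, and `Dequantize` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

// Per-tensor affine quantization: real_value = scale * (q - zero_point).
struct QuantParams {
    float scale;
    int zero_point;
};

// Derives parameters that map the range [min_val, max_val] onto int8 [-128, 127].
// The range is widened to include 0 so that 0.0 is exactly representable.
QuantParams ChooseQuantParams(float min_val, float max_val) {
    min_val = std::min(min_val, 0.0f);
    max_val = std::max(max_val, 0.0f);
    float scale = (max_val - min_val) / 255.0f;
    if (scale == 0.0f) scale = 1.0f; // all-zero tensor: any scale works
    int zero_point = static_cast<int>(std::lround(-128.0f - min_val / scale));
    return {scale, zero_point};
}

// Rounds to the nearest step and clamps to the int8 range.
std::int8_t Quantize(float value, const QuantParams& p) {
    long q = std::lround(value / p.scale) + p.zero_point;
    q = std::max(-128L, std::min(127L, q));
    return static_cast<std::int8_t>(q);
}

// Recovers an approximation of the original value.
float Dequantize(std::int8_t q, const QuantParams& p) {
    return p.scale * static_cast<float>(static_cast<int>(q) - p.zero_point);
}
```

Each float becomes a single byte, so storage shrinks by about 4x. The round trip through `Quantize` and `Dequantize` loses at most half a step of `scale` for values inside the range, which is where the accuracy cost comes from.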

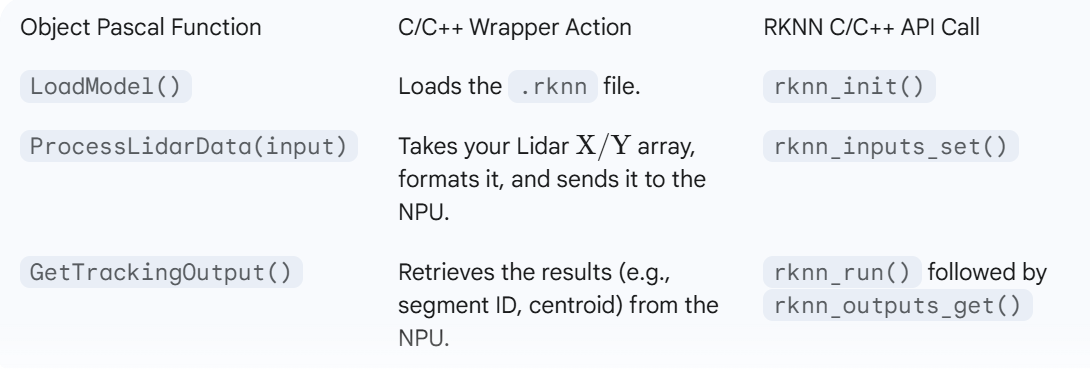

2. Object Pascal Integration on the Orange Pi 5

Once the .rknn model is generated, you shift to the Orange Pi 5 environment for integration with your Object Pascal code:

- Use the C/C++ Inference Library:
  - The Rockchip SDK provides a C/C++ library for NPU inference (often called the RKNN C/C++ API). This is the key library that runs the .rknn model on the NPU.

- Create the Object Pascal Wrapper (DLL/SO):
  - This is the final bridge between your core Object Pascal program and the NPU hardware.
  - Write a simple C/C++ library (a .so file on Linux) that includes the Rockchip NPU header files and exposes functions that Object Pascal can call.

In summary, the NPU makes this project scalable and fast. The key is using the specialized Rockchip RKNN Toolkit to convert your PointNet model, allowing your existing Object Pascal application to offload the hard work to the NPU.

=============================================================================

Q. How to create a wrapper .so file to be used with Object Pascal?

Creating a wrapper .so file (Shared Object, the Linux equivalent of a DLL) to be used with Object Pascal involves three main steps: C/C++ Code, Compilation, and Pascal Linking.

Since your target is the Orange Pi 5 with the RKNN NPU API, the wrapper must be written in C/C++ to interface with that SDK.

Here is a step-by-step guide focusing on the necessary code and structure:

1. Create the C/C++ Wrapper Code

You will create a C/C++ source file (e.g., rknn_wrapper.cpp) that contains all the logic to load the model, process the data, and run the NPU inference. The key is using the extern "C" declaration to prevent C++ name mangling, ensuring Object Pascal can find the functions by their exact name.

Example C/C++ Code (rknn_wrapper.cpp)

    #include <iostream>
    #include <new>  // std::nothrow

    // Include the RKNN NPU API header here once the real calls are filled in.
    // #include <rknn_api.h>

    // Lidar point layout; must match the Pascal TPoint record field for field.
    // float is 4 bytes here, matching Pascal's Single.
    typedef struct {
        float x;
        float y;
    } PascalPoint;

    // --- Function 1: Initialize and Load Model ---
    // Returns 1 on success, 0 on failure.
    extern "C" int InitializeRKNNModel(const char* model_path) {
        if (model_path == nullptr) return 0;
        // 1. Initialize RKNN context (rknn_context)
        // 2. Load the .rknn model file from model_path
        // 3. Set input/output parameters
        // Placeholder for actual RKNN calls
        std::cout << "RKNN Model Initialized: " << model_path << std::endl;
        // return rknn_init(...) == 0; // Actual success check
        return 1;
    }

    // --- Function 2: Run Inference ---
    // Returns a pointer to a newly allocated float array, or nullptr on failure.
    // The caller must release it with FreeOutput.
    extern "C" float* RunInference(PascalPoint* input_points, int num_points) {
        if (input_points == nullptr || num_points <= 0) return nullptr;
        // 1. Format the input_points array for RKNN
        // 2. Call rknn_inputs_set()
        // 3. Call rknn_run() to execute on the NPU
        // 4. Call rknn_outputs_get() to retrieve the results
        // 5. Copy the results out, then call rknn_outputs_release()

        // Example: space for 5 segments, each with 2 floats for its centroid.
        // The trailing () zero-initializes the array, and std::nothrow returns
        // nullptr instead of throwing a C++ exception across the C boundary.
        int output_size = 5 * 2;
        float* output_data = new (std::nothrow) float[output_size]();
        if (output_data == nullptr) return nullptr;

        // Populate output_data with results (placeholder values shown here)
        output_data[0] = 100.0f; // X of first predicted centroid
        output_data[1] = 200.0f; // Y of first predicted centroid
        return output_data; // This pointer is passed back to Pascal
    }

    // --- Function 3: Clean Up Memory ---
    // Releases the memory allocated by RunInference.
    extern "C" void FreeOutput(float* ptr) {
        delete[] ptr; // safe to call with nullptr
    }

    // --- Function 4: Clean Up Model ---
    extern "C" void ReleaseModel() {
        // Call rknn_destroy() to free the NPU context
        std::cout << "RKNN Model Released." << std::endl;
    }
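Step 1 of RunInference ("format the input_points array") deserves a closer look, because a converted model normally has a fixed input shape while a Lidar scan can deliver a varying number of points. The sketch below assumes the model expects `model_points` points as a flat row-major [x0, y0, x1, y1, ...] float buffer; the real layout and any normalization depend on how your model was trained and exported. `FlattenPoints` is a hypothetical helper name.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Same layout as the PascalPoint struct in the wrapper.
struct PascalPoint {
    float x;
    float y;
};

// Copies `num_points` points into a flat [x0, y0, x1, y1, ...] buffer that
// always holds exactly `model_points` points: extra points are dropped and
// missing ones are filled with zeros.
std::vector<float> FlattenPoints(const PascalPoint* points, int num_points,
                                 int model_points) {
    const std::size_t total =
        static_cast<std::size_t>(std::max(model_points, 0)) * 2;
    std::vector<float> buffer(total, 0.0f);
    if (points == nullptr || num_points <= 0) return buffer;

    const int count = std::min(num_points, model_points);
    for (int i = 0; i < count; ++i) {
        buffer[2 * i] = points[i].x;
        buffer[2 * i + 1] = points[i].y;
    }
    return buffer;
}
```

The resulting buffer's `data()` pointer and byte size are what you would hand to the NPU input call. Zero padding is only one possible policy; repeating the last valid point or resampling the scan may suit some models better.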

2. Compile the Shared Object (.so)

You must compile the C/C++ file on your Orange Pi 5 (or a cross-compilation environment targeting ARM Linux) and link it against the RKNN NPU API libraries.

Compilation Command (Linux/Orange Pi 5)

Assuming you are using the g++ compiler:

    # -shared creates the .so file
    # -fPIC generates position-independent code (required for shared libraries)
    # -o specifies the output file name
    # -I and -L add the RKNN header and library search paths
    # -l links the RKNN runtime library. On RK3588 boards this is typically
    #    librknnrt.so (-lrknnrt); some SDK versions name it librknn_api.so
    #    (-lrknn_api). Adjust the name to match your SDK.
    g++ -shared -fPIC rknn_wrapper.cpp -o librknnwrapper.so -I/path/to/rknn/headers -L/path/to/rknn/libs -lrknnrt

This command produces librknnwrapper.so, which is the file your Pascal program will load.

3. Link and Call from Object Pascal

In your Object Pascal unit (e.g., in Free Pascal or Lazarus), you use the external keyword to declare the functions contained in the .so file.

Example Object Pascal Code

    unit RknnInferenceWrapper;

    {$mode objfpc}{$H+}

    interface

    // Define a type that EXACTLY matches the C/C++ struct
    type
      TPoint = record
        X: Single; // Single (4 bytes) matches C/C++ float
        Y: Single;
      end;
      PPascalPoint = ^TPoint; // Pointer to the input array

    // Declare the functions exactly as they are exported by the C/C++ file.
    // LongInt is always 32-bit, matching C/C++ int on this platform.
    function InitializeRKNNModel(model_path: PChar): LongInt; cdecl; external 'librknnwrapper.so';
    function RunInference(input_points: PPascalPoint; num_points: LongInt): PSingle; cdecl; external 'librknnwrapper.so';
    procedure FreeOutput(ptr: PSingle); cdecl; external 'librknnwrapper.so';
    procedure ReleaseModel; cdecl; external 'librknnwrapper.so';

    implementation

    end.

    // --- Example Usage in Your Main Program (separate file) ---
    program LidarInference;

    {$mode objfpc}{$H+}

    uses RknnInferenceWrapper;

    var
      LidarPoints: array[0..398] of TPoint;
      PredictedOutput: PSingle;
    begin
      // 1. Initialize the model
      if InitializeRKNNModel('/path/to/model.rknn') = 1 then
      begin
        // 2. Prepare Lidar data (fill LidarPoints array)
        // ...

        // 3. Run inference. The model will run on the NPU!
        PredictedOutput := RunInference(@LidarPoints[0], Length(LidarPoints));
        if PredictedOutput <> nil then
        begin
          // 4. Access the results (typed pointers can be indexed like arrays)
          Writeln('Predicted Centroid X: ', PredictedOutput[0]:0:2);
          Writeln('Predicted Centroid Y: ', PredictedOutput[1]:0:2);
          // 5. Clean up memory
          FreeOutput(PredictedOutput);
        end;
        // 6. Clean up model (only after a successful initialization)
        ReleaseModel;
      end;
    end.

Key Points for Compatibility:

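- Calling Convention: Always use cdecl in Object Pascal, so that arguments are passed the way the C/C++ functions expect.

- Data Types: Use fixed-size types: Single (4 bytes) for C/C++ float, LongInt (4 bytes) for C/C++ int, and PChar for C/C++ const char*. Integer is 4 bytes in Free Pascal's objfpc and delphi modes (the Lazarus default) but only 2 bytes in the default fpc mode, so LongInt is the safer choice.

- Pointers and Memory: When a C/C++ function allocates memory (like new float[]), your Object Pascal code receives a pointer. You must call a corresponding C/C++ cleanup function (FreeOutput) to prevent memory leaks. Never release such a pointer with Pascal's own FreeMem or Dispose, because the two runtimes use different allocators.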
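One way to sidestep the cross-language memory question entirely is to let the Pascal side own the output buffer. The sketch below is a hypothetical alternative to the RunInference/FreeOutput pair, not something the RKNN SDK provides: `RunInferenceInto` is an invented name, and the body uses the same placeholder values as the example wrapper. The `static_assert` lines document the layout assumptions that the Pascal record relies on.

```cpp
#include <algorithm>

// Same layout as the PascalPoint struct in the wrapper.
struct PascalPoint {
    float x;
    float y;
};

// Layout checks: these must hold for a Pascal record of two Single fields to match.
static_assert(sizeof(float) == 4, "Pascal Single is 4 bytes");
static_assert(sizeof(PascalPoint) == 8, "a record of two Singles is 8 bytes");

// The caller owns `out`. Returns the number of floats written, or -1 on bad
// arguments or if `out_capacity` is too small.
extern "C" int RunInferenceInto(const PascalPoint* input_points, int num_points,
                                float* out, int out_capacity) {
    const int kOutputSize = 5 * 2; // same placeholder size as the example wrapper
    if (input_points == nullptr || num_points <= 0 || out == nullptr ||
        out_capacity < kOutputSize) {
        return -1;
    }
    // Placeholder for the real NPU call: zero everything, then fake one centroid.
    std::fill(out, out + kOutputSize, 0.0f);
    out[0] = 100.0f;
    out[1] = 200.0f;
    return kOutputSize;
}
```

On the Pascal side this would be declared with an extra PSingle and LongInt parameter and called with a local array[0..9] of Single, so no cleanup function is needed and nothing is allocated per scan. The trade-off is that the caller has to know the maximum output size in advance.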