Extracting Metric Depth Data from HeatMap

2025.10.13 14:36

The code you provided is a clean, fully functional Object Pascal solution for live depth estimation from a camera stream using MiDaS DNN and the OCVWrapper.

The code is well-structured for your SLAM project because it isolates the complex visual processing (GenerateDepthMap) into a single, repeatable function that takes an input frame.

The next logical step in your SLAM pipeline is to extract useful metric data from this depth map.

Here is the plan for the next step: how to get from the raw network output behind the visualization (the colored depth map) to numerical depth values that can be used for Stereo Visual Odometry (S-VO) or simply for 3D reconstruction.

Next Step: Extracting Metric Depth Data

The current GenerateDepthMap procedure produces a beautifully visualized depth map (outColor), but the core numerical data is in the heatMap variable.

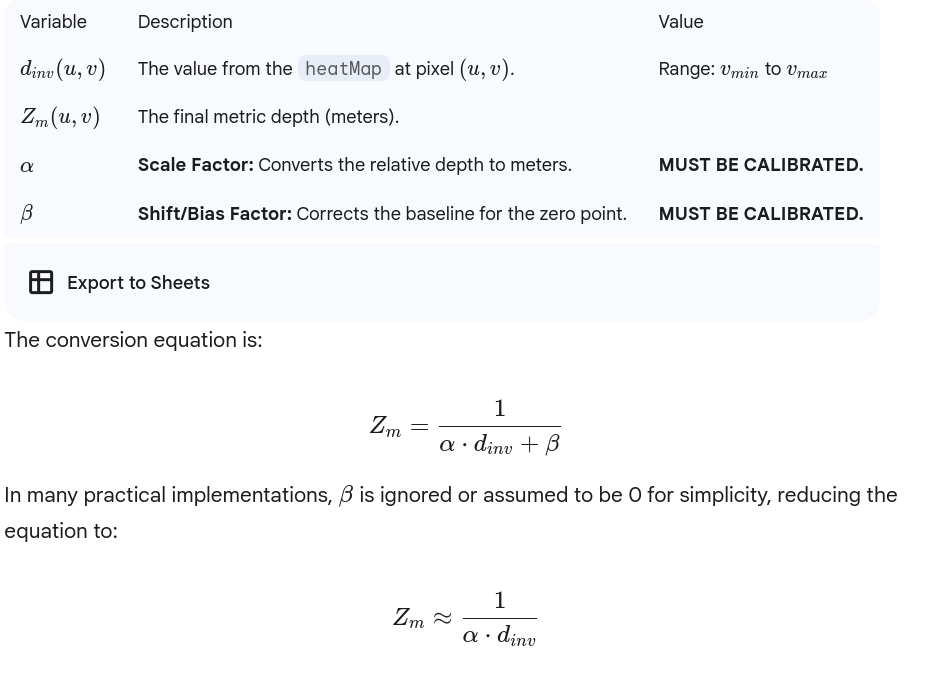

The critical issue is that the MiDaS model output is not metric depth (meters); it is relative inverse depth (disparity-like), defined only up to an unknown scale and shift. A larger value (a brighter pixel in the visualization) means the surface is closer, but the value itself does not correspond to a specific distance in meters.

To get the metric depth needed for SLAM, you need to recover that scale and shift through calibration.
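Written out, the relation assumed throughout this section is an affine correction of the MiDaS value followed by an inversion. The symbols $\alpha$ (scale) and $\beta$ (shift) are the calibration unknowns, and $d_{\text{inv}}$ is the raw value stored in heatMap:

$$
Z_m = \frac{1}{\alpha\, d_{\text{inv}} + \beta}
\quad\Longleftrightarrow\quad
\frac{1}{Z_m} = \alpha\, d_{\text{inv}} + \beta
$$

Because the right-hand form is linear in $\alpha$ and $\beta$, two targets at known distances $Z_1 \neq Z_2$ with raw values $d_1 \neq d_2$ are enough for a first estimate:

$$
\alpha = \frac{1/Z_1 - 1/Z_2}{d_1 - d_2}, \qquad
\beta = \frac{1}{Z_1} - \alpha\, d_1
$$

Keep in mind that MiDaS predictions are scale- and shift-invariant per image, so values estimated on one frame may not carry over to another scene without re-estimation.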

1. Extracting the Raw Inverse Depth

Your existing code already captures the raw DNN output:

    // The output 'outmat' is a 256x256 single-channel float map.
    outptr := pCvMatGetDimPtr(outmat, 0, 0);
    matdims[0] := 256;
    matdims[1] := 256;
    heatMap := pCvMatCreate(2, @matdims[0], CV_32F, outptr); // 'heatMap' holds the inverse depth

The heatMap is a CV_32F (32-bit floating point) Mat where each pixel represents the inverse relative depth.
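As a minimal sketch of what that layout means in practice, the Free Pascal program below treats the map as a flat, row-major array of Single and reads one pixel plus the value range. It uses a small synthetic buffer in place of the real Mat data, and it assumes the Mat is stored as one continuous block. PixelAt and ValueRange are hypothetical helper names, not part of OCVWrapper.

```pascal
program HeatMapAccess;
{$mode objfpc}

const
  MAP_W = 4; // the real MiDaS map is 256 wide; 4x3 keeps the demo readable
  MAP_H = 3;

// Value at (Row, Col) of a continuous, row-major float buffer.
function PixelAt(Data: PSingle; Width, Row, Col: Integer): Single;
begin
  Result := Data[Row * Width + Col];
end;

// Smallest and largest value in the buffer (useful before normalizing).
procedure ValueRange(Data: PSingle; Count: Integer; out MinVal, MaxVal: Single);
var
  i: Integer;
begin
  MinVal := Data[0];
  MaxVal := Data[0];
  for i := 1 to Count - 1 do
  begin
    if Data[i] < MinVal then MinVal := Data[i];
    if Data[i] > MaxVal then MaxVal := Data[i];
  end;
end;

var
  Buf: array[0..MAP_W * MAP_H - 1] of Single;
  i: Integer;
  MinVal, MaxVal: Single;
begin
  // Synthetic inverse-depth values standing in for the MiDaS output.
  for i := 0 to High(Buf) do
    Buf[i] := 10.0 * i;

  ValueRange(@Buf[0], Length(Buf), MinVal, MaxVal);
  WriteLn('pixel(1,2) = ', PixelAt(@Buf[0], MAP_W, 1, 2):0:1);
  WriteLn('range      = ', MinVal:0:1, ' .. ', MaxVal:0:1);
end.
```

With the real data you would pass the pointer returned for the Mat (cast to PSingle) and a width of 256 instead of the synthetic buffer.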

2. Implementing the Scaling (Calibration)

Since you plan to use this with a Stereo Camera setup on the Orange Pi 5, the most accurate way to get the metric scale is Self-Calibration through Sensor Fusion: aligning the MiDaS output with metric measurements you already have, such as stereo-triangulated points or LiDAR ranges.

For now, we can add a basic function that applies an estimated scale and converts the inverse depth to an approximate metric depth in meters.
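One possible way to obtain the scale and shift, sketched here under the assumption that metric depth follows 1/Z = ALPHA * d + BETA, is a least-squares line fit over a handful of pixels whose true distance is known. FitAlphaBeta is a hypothetical helper, and the sample values are synthetic (generated from known constants so the fit can be checked), not measurements.

```pascal
program FitDepthScale;
{$mode objfpc}

// Least-squares fit of 1/Z = Alpha * d + Beta from paired samples.
// D[i] is the raw MiDaS value at a target pixel,
// Z[i] is the measured distance to that target in meters.
function FitAlphaBeta(const D, Z: array of Single;
  out Alpha, Beta: Single): Boolean;
var
  i, n: Integer;
  sx, sy, sxx, sxy, y, denom: Double;
begin
  Result := False;
  Alpha := 0;
  Beta := 0;
  n := Length(D);
  if (n < 2) or (n <> Length(Z)) then Exit;

  sx := 0; sy := 0; sxx := 0; sxy := 0;
  for i := 0 to n - 1 do
  begin
    if Z[i] <= 0 then Exit; // distances must be positive
    y := 1.0 / Z[i];
    sx := sx + D[i];
    sy := sy + y;
    sxx := sxx + D[i] * D[i];
    sxy := sxy + D[i] * y;
  end;

  denom := n * sxx - sx * sx;
  if Abs(denom) < 1e-12 then Exit; // all raw values identical: no unique line

  Alpha := (n * sxy - sx * sy) / denom;
  Beta := (sy - Alpha * sx) / n;
  Result := True;
end;

const
  TRUE_ALPHA = 0.005; // constants used only to synthesize test data
  TRUE_BETA = 0.01;
  SampleD: array[0..3] of Single = (50.0, 100.0, 200.0, 400.0);
var
  SampleZ: array[0..3] of Single;
  i: Integer;
  Alpha, Beta: Single;
  FitOk: Boolean;
begin
  // Synthesize "measured" distances from the known constants.
  for i := 0 to High(SampleD) do
    SampleZ[i] := 1.0 / (TRUE_ALPHA * SampleD[i] + TRUE_BETA);

  FitOk := FitAlphaBeta(SampleD, SampleZ, Alpha, Beta);
  if FitOk then
    WriteLn('ALPHA = ', Alpha:0:5, '  BETA = ', Beta:0:5)
  else
    WriteLn('fit failed');
end.
```

In a real setup the distances would come from a ruler, a known-distance target, or stereo/LiDAR ranges, and more samples spread across near and far ranges should make the fit less sensitive to noise.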

3. Proposed Code Modification

You need a new function, perhaps called GetScaledDepthMat, to perform this conversion.

    function GetScaledDepthMat(const InverseDepthMap: PCvMat_t): PCvMat_t;
    // InverseDepthMap is the original 'heatMap' from the MiDaS output (CV_32F, 256x256).
    const
      // !!! Placeholder values for demonstration. ALPHA and BETA must be determined
      // by calibration (e.g., using a ruler or a known-distance target). !!!
      ALPHA: Single = 0.005; // Scale factor (e.g., 1/200, highly dependent on model and scene)
      BETA: Single = 0.01;   // Shift factor
      MAP_SIZE = 256;        // Width and height of the MiDaS output
      MIN_DENOM = 1e-6;      // Guards against division by zero
    var
      ScaledDepthMat: PCvMat_t;
      p: PSingle;
      i: Integer;
      denom: Single;
    begin
      // Work on a copy so the raw inverse depth stays available for visualization.
      ScaledDepthMat := pCvMatClone(InverseDepthMap);

      // Convert inverse depth (disparity) to metric depth (Z_m) per pixel:
      //   Z_m = 1 / (ALPHA * d_inv + BETA)
      // OCVWrapper might not have a direct Mat expression for 1 / (A*B + C), so the
      // loop below walks the raw float data. This assumes the cloned Mat is one
      // continuous 256x256 CV_32F buffer and that pCvMatGetDimPtr returns a pointer
      // to its first element.
      p := PSingle(pCvMatGetDimPtr(ScaledDepthMat, 0, 0));
      for i := 0 to MAP_SIZE * MAP_SIZE - 1 do
      begin
        denom := ALPHA * p^ + BETA;
        if denom > MIN_DENOM then
          p^ := 1.0 / denom
        else
          p^ := 0.0; // No usable depth for this pixel
        Inc(p);
      end;

      Result := ScaledDepthMat; // Approximate metric depth in meters (still CV_32F)
    end;

4. Integration into your SLAM system

The output of this refined process will be a dense depth image in meters, which can be back-projected into a Metric Depth Point Cloud.

This output will serve as the core input for the next major component of your system on the Orange Pi 5:

- Visual Odometry (VO): The metric depth is used to back-project 2D feature points into 3D points, allowing you to calculate the true metric motion between frames (odometry).

- LiDAR Fusion: The metric depth map can be used to convert your sparse 2D LiDAR points into 3D, or simply to validate the depth and position of your extracted segment centroids, improving the observation input to your EKF-SLAM.
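The 2D-to-3D projection mentioned under Visual Odometry can be sketched with the standard pinhole camera model. This is only an illustration: TPoint3D and BackProjectPixel are hypothetical names, and the intrinsics (Fx, Fy, Cx, Cy) used in the demo are made-up values. Real ones come from your camera calibration and must be scaled to the resolution of the depth map you sample from.

```pascal
program BackProject;
{$mode objfpc}

type
  TPoint3D = record
    X, Y, Z: Single; // camera-frame coordinates in meters
  end;

// Back-project pixel (U, V) with metric depth DepthM into the camera frame.
// Fx, Fy are focal lengths in pixels; Cx, Cy is the principal point.
function BackProjectPixel(U, V, DepthM, Fx, Fy, Cx, Cy: Single): TPoint3D;
begin
  Result.X := (U - Cx) * DepthM / Fx;
  Result.Y := (V - Cy) * DepthM / Fy;
  Result.Z := DepthM;
end;

var
  P: TPoint3D;
begin
  // Illustrative intrinsics for a 640x480 image; not calibrated values.
  P := BackProjectPixel(400.0, 300.0, 2.0, 500.0, 500.0, 320.0, 240.0);
  WriteLn('X = ', P.X:0:3, '  Y = ', P.Y:0:3, '  Z = ', P.Z:0:3);
end.
```

Applying the same function to matched feature points in two consecutive frames gives the 3D correspondences from which the metric motion between frames can be estimated.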