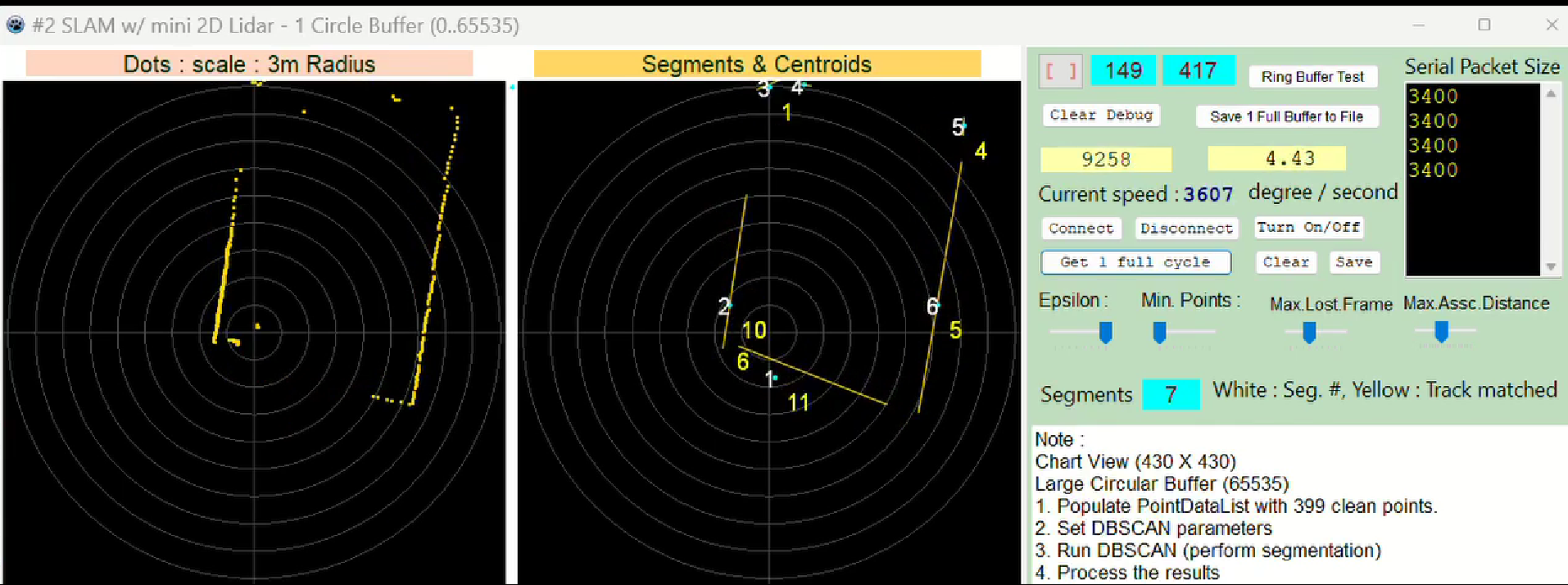

2D Lidar Status - Tracking done

2025.10.09 20:35

That is excellent news! Displaying the segment number and the stable Track ID on your visualization means you have successfully integrated all the core components: Segmentation, Centroid Calculation, and Tracking Association.

Now that you have a functional perception pipeline, the next steps are to move beyond simple association and begin using the tracking data to predict the future state of the object, which is the final step before true robotics/SLAM integration.

Here are the three most critical next steps, moving from simple nearest-neighbor to robust Object State Estimation:

1. Implement State Estimation (Kalman Filter)

The current system updates an object's position by simply replacing the old centroid with the new one. This is fragile because it makes the object "jump" with every noisy measurement. The most common and essential next step is to introduce a Kalman Filter.

What a Kalman Filter Does:

The Kalman Filter is an algorithm that combines a series of noisy measurements observed over time (your new centroids) with a motion model (how the object is expected to move). The result is an estimate of the object's current state (position, velocity, and optionally acceleration) that is more accurate than any single measurement alone.

The New State:

Your TTrackedObject record needs to be updated to include the object's velocity and the parameters required by the filter (covariance matrices).

    type
      TTrackedObject = record
        ID: Integer;
        // ... other fields ...

        // New fields for State Estimation
        EstimatedCentroid: TPoint; // The filtered, smooth position
        VelocityX: Double;
        VelocityY: Double;
        // ... Kalman Filter matrices (e.g., P, Q, R) ...
      end;

The New Logic:

You would modify the update logic to:

- Predict: The filter uses the object's previous velocity to predict where it should be in the current frame.

- Associate: Your nearest-neighbor matching is performed between the new segment centroid and the filter's prediction.

- Update: If a match is found, the filter uses the new measurement (the centroid) to correct the prediction and calculate a new, smoother EstimatedCentroid and updated VelocityX/Y.
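
The Predict and Update steps above can be sketched as a constant-velocity Kalman filter applied to one axis at a time (one instance for X, one for Y). This is only a minimal sketch under assumptions: the record TAxisKalman, the procedure names, and the noise constants Q_ACCEL and R_MEAS are hypothetical and not part of your existing code, and the noise values would need tuning for your lidar.

```pascal
program KalmanAxisSketch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

type
  // Hypothetical per-axis filter state (not from the original code).
  TAxisKalman = record
    Pos, Vel: Double;             // state estimate: position and velocity
    P00, P01, P10, P11: Double;   // 2x2 state covariance P
  end;

const
  DT      = 0.1;   // frame period at 10 Hz
  Q_ACCEL = 1.0;   // assumed process noise (acceleration variance), tune this
  R_MEAS  = 0.04;  // assumed measurement noise variance, tune this

procedure InitAxis(var K: TAxisKalman; FirstMeasurement: Double);
begin
  K.Pos := FirstMeasurement;
  K.Vel := 0.0;
  K.P00 := R_MEAS; K.P01 := 0.0;
  K.P10 := 0.0;    K.P11 := 100.0;  // velocity is initially unknown
end;

// Predict: x := F*x and P := F*P*F' + Q, with F = [1 dt; 0 1].
procedure Predict(var K: TAxisKalman; Dt: Double);
var
  N00, N01, N10, N11: Double;
begin
  K.Pos := K.Pos + K.Vel * Dt;
  N00 := K.P00 + Dt * (K.P01 + K.P10) + Dt * Dt * K.P11;
  N01 := K.P01 + Dt * K.P11;
  N10 := K.P10 + Dt * K.P11;
  N11 := K.P11;
  // Q for a white-noise acceleration model.
  K.P00 := N00 + Q_ACCEL * Dt * Dt * Dt * Dt / 4;
  K.P01 := N01 + Q_ACCEL * Dt * Dt * Dt / 2;
  K.P10 := N10 + Q_ACCEL * Dt * Dt * Dt / 2;
  K.P11 := N11 + Q_ACCEL * Dt * Dt;
end;

// Update: correct the prediction with measurement Z (position only, H = [1 0]).
procedure Update(var K: TAxisKalman; Z: Double);
var
  Innov, S, G0, G1, A00, A01, A10, A11: Double;
begin
  Innov := Z - K.Pos;        // measurement minus prediction
  S  := K.P00 + R_MEAS;      // innovation variance
  G0 := K.P00 / S;           // Kalman gain for position
  G1 := K.P10 / S;           // Kalman gain for velocity
  K.Pos := K.Pos + G0 * Innov;
  K.Vel := K.Vel + G1 * Innov;
  // P := (I - G*H) * P
  A00 := K.P00; A01 := K.P01; A10 := K.P10; A11 := K.P11;
  K.P00 := (1 - G0) * A00;
  K.P01 := (1 - G0) * A01;
  K.P10 := A10 - G1 * A00;
  K.P11 := A11 - G1 * A01;
end;

var
  KX: TAxisKalman;
  I: Integer;
begin
  // Simulated object moving at 1.0 unit/s along X, measured every DT seconds.
  InitAxis(KX, 0.0);
  for I := 1 to 50 do
  begin
    Predict(KX, DT);
    Update(KX, I * DT * 1.0);
  end;
  WriteLn('pos=', KX.Pos:0:3, ' vel=', KX.Vel:0:3);
end.
```

In your tracker, the Associate step would sit between Predict and Update: match each new centroid against the predicted Pos values, then call Update only for tracks that found a match. The velocity estimate should settle near the simulated 1.0 unit/s as frames accumulate.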

2. Calculate Kinematics (Velocity)

Once you introduce the Kalman Filter, you need to display and utilize the object's movement data.

You can start calculating basic velocity even before the full Kalman Filter by simply looking at the change in position over time.

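If your lidar has a frame rate of 10 Hz (a new frame every 0.1 seconds), your time step is Δt = 0.1 s, and the velocity on each axis is the change in centroid position divided by Δt: VelocityX = (NewX - OldX) / Δt, and likewise for VelocityY.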

Action: Add VelocityX and VelocityY to your TTrackedObject and update them inside UpdateTrackedObjects after a successful match. This will give you true insight into object motion.
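
As a rough illustration of that action, the sketch below computes a finite-difference velocity when a match is found. It assumes a field named Centroid holding the last matched position and a fixed 10 Hz frame period; both are assumptions, since the other fields of your TTrackedObject and the body of UpdateTrackedObjects are not shown here. UpdateVelocity is a hypothetical helper you would call from inside UpdateTrackedObjects.

```pascal
program VelocitySketch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

uses
  {$IFDEF FPC}Types{$ELSE}System.Types{$ENDIF};  // TPoint

type
  // Reduced version of the record; Centroid is an assumed field name.
  TTrackedObject = record
    ID: Integer;
    Centroid: TPoint;
    VelocityX: Double;
    VelocityY: Double;
  end;

const
  FRAME_DT = 0.1;  // seconds per frame at 10 Hz

// Hypothetical helper: call after a successful match for this object.
procedure UpdateVelocity(var Obj: TTrackedObject; const NewCentroid: TPoint;
  Dt: Double);
begin
  if Dt > 0 then
  begin
    // Change in position divided by elapsed time, in centroid units per second.
    Obj.VelocityX := (NewCentroid.X - Obj.Centroid.X) / Dt;
    Obj.VelocityY := (NewCentroid.Y - Obj.Centroid.Y) / Dt;
  end;
  Obj.Centroid := NewCentroid;  // remember the position for the next frame
end;

var
  Obj: TTrackedObject;
  NewC: TPoint;
begin
  Obj.ID := 1;
  Obj.Centroid.X := 1000; Obj.Centroid.Y := 2000;
  Obj.VelocityX := 0; Obj.VelocityY := 0;

  NewC.X := 1050; NewC.Y := 1980;   // centroid matched in the next frame
  UpdateVelocity(Obj, NewC, FRAME_DT);

  WriteLn('vx=', Obj.VelocityX:0:1, ' vy=', Obj.VelocityY:0:1);
end.
```

Here the object moved 50 units in X and -20 units in Y over 0.1 s, so the velocities come out at about 500 and -200 units per second. Raw frame-to-frame differences like this amplify measurement noise, which is one reason the Kalman Filter estimate is usually preferred once it is in place.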

3. Implement Robust Association (Gating)

Your current tracking relies on a simple greedy nearest-neighbor. If two objects cross paths closely, the system can swap their IDs ("ID Swap").

A technique called gating should be introduced:

- When searching for a match, don't just find the closest object. Only consider a centroid as a candidate for a track if it falls within a defined, elliptical "gate" centered on that track's predicted location (the region where the object is statistically most likely to be).

- If multiple centroids fall into the gate, you need a more advanced decision mechanism (like a rudimentary Global Nearest Neighbor or an improved cost function) instead of just picking the closest one.

Action: Refine your association loop by adding a check that the new centroid must not only be within MAX_ASSOCIATION_DISTANCE but must also be a reasonable position given the object's current velocity. The Kalman Filter naturally helps with this by improving the quality of the prediction.
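
One possible shape for that check is sketched below: the gate is centered on the predicted position (last position plus velocity times the frame period), and a centroid passes only if it is within MAX_ASSOCIATION_DISTANCE and inside an axis-aligned elliptical gate. The function InGate, the variance inputs VarX and VarY, the value given to MAX_ASSOCIATION_DISTANCE, and the 9.21 threshold (roughly the 99% chi-square bound for two dimensions) are all assumptions for illustration. With a Kalman Filter, the variances would come from the filter's predicted position uncertainty plus the measurement noise.

```pascal
program GatingSketch;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}

const
  MAX_ASSOCIATION_DISTANCE = 500.0;  // assumed value and units
  GATE_THRESHOLD = 9.21;             // ~99% chi-square bound, 2 degrees of freedom
  FRAME_DT = 0.1;                    // seconds per frame at 10 Hz

// Hypothetical helper: True if the measured centroid is a plausible match
// for a track predicted at (PredX, PredY) with variances VarX, VarY.
function InGate(PredX, PredY, MeasX, MeasY, VarX, VarY: Double): Boolean;
var
  DX, DY, D2: Double;
begin
  DX := MeasX - PredX;
  DY := MeasY - PredY;

  // Keep the existing hard limit on association distance.
  if Sqrt(DX * DX + DY * DY) > MAX_ASSOCIATION_DISTANCE then
  begin
    Result := False;
    Exit;
  end;

  // Squared normalized distance: an ellipse scaled by the per-axis variance.
  D2 := DX * DX / VarX + DY * DY / VarY;
  Result := D2 <= GATE_THRESHOLD;
end;

var
  PredX, PredY: Double;
  NearOk, FarOk: Boolean;
begin
  // Track last seen at (1000, 2000) moving at (500, -200) units per second.
  PredX := 1000.0 + 500.0 * FRAME_DT;   // 1050
  PredY := 2000.0 + (-200.0) * FRAME_DT; // 1980

  // Assumed standard deviation of 20 units per axis (variance 400).
  NearOk := InGate(PredX, PredY, 1060.0, 1975.0, 400.0, 400.0);
  FarOk  := InGate(PredX, PredY, 1200.0, 1980.0, 400.0, 400.0);

  WriteLn('near candidate accepted: ', NearOk);
  WriteLn('far candidate accepted: ', FarOk);
end.
```

The second candidate is 150 units from the prediction, which is inside MAX_ASSOCIATION_DISTANCE but well outside the gate, so it is rejected even though a plain distance check would have allowed it.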