Rover written with RUST

Fourth : Depth Map

2025.10.28 20:30

/* From Third Stage,

This is the code we were about to add. Here's the plan:

-

Grayscale: We'll convert our two rectified images (

rectified_landrectified_r) to grayscale. -

Compute Disparity: We'll use an OpenCV algorithm called

StereoBM(Block Matching) to compare the two grayscale images and create aMatfull of disparity values. -

Visualize: That new

Matwon't be colorful yet. We'll add two more lines:-

normalize(): To convert the raw disparity data into a viewable 8-bit image. -

apply_color_map(): To add the "jet" colormap (red for close, blue for far) just like in your photo.

-

*/

//= LOCAL FOLDER : ~/RUST/depth_map =================================================

use opencv::{

prelude::*,

core::{self, Mat, Size2i, Rect, Scalar, CV_32FC1, CV_8UC1, BORDER_CONSTANT, NORM_MINMAX},

calib3d::{self, stereo_rectify, init_undistort_rectify_map, StereoBM},

imgcodecs::{self, IMREAD_COLOR},

// FIX: Removed 'APPLY_COLOR_MAP' from the import list.

imgproc::{self, COLOR_BGR2GRAY, COLORMAP_JET},

highgui::{self, WINDOW_AUTOSIZE},

Result,

Error,

};

use serde::{Deserialize, Serialize};

use std::fs;

// --- Configuration ---

const CALIBRATION_FILE: &str = "../main_data/stereo_params.yml";

const TEST_IMAGE_INDEX: u32 = 0;

const DATA_DIR: &str = "../rover_vo";

// --- Data Structure for OpenCV Mat ---

#[derive(Debug, Deserialize, Serialize)]

struct MatData {

dt: String,

rows: i32,

cols: i32,

data: Vec<f64>,

}

// --- Data Structure for the ENTIRE YAML file ---

#[derive(Debug, Deserialize, Serialize)]

struct StereoParams {

image_width: i32,

image_height: i32,

rms_error: f64,

#[serde(rename = "K1")]

k1: MatData,

#[serde(rename = "D1")]

d1: MatData,

#[serde(rename = "K2")]

k2: MatData,

#[serde(rename = "D2")]

d2: MatData,

#[serde(rename = "R")]

r: MatData,

#[serde(rename = "T")]

t: MatData,

}

// --- Helper function to convert MatData struct to an OpenCV Mat ---

fn mat_from_data(data: &MatData) -> Result<Mat> {

if data.dt != "d" {

return Err(Error::new(core::Code::StsError.into(), "Data type must be f64 ('d')"));

}

let cols = data.cols as usize;

if cols == 0 {

return Err(Error::new(core::Code::StsError.into(), "Matrix data has 0 columns."));

}

let rows: Vec<&[f64]> = data.data.chunks(cols).collect();

let mat = Mat::from_slice_2d(&rows)?;

Ok(mat)

}

fn run_depth_pipeline() -> Result<()> {

// --- 1. Load Calibration Data ---

println!("--- 1. Loading Calibration Data from {} ---", CALIBRATION_FILE);

let yaml_content = fs::read_to_string(CALIBRATION_FILE)

.map_err(|e| Error::new(core::Code::StsError.into(), &format!("Failed to read calibration file: {}", e)))?;

let params: StereoParams = serde_yaml::from_str(&yaml_content)

.map_err(|e| Error::new(core::Code::StsError.into(), &format!("YAML Deserialize Error: {}. Check stereo_params.yml format.", e)))?;

let image_size = Size2i::new(params.image_width, params.image_height);

println!("Converting loaded data into OpenCV Mats...");

let k1 = mat_from_data(¶ms.k1)?;

let d1 = mat_from_data(¶ms.d1)?;

let k2 = mat_from_data(¶ms.k2)?;

let d2 = mat_from_data(¶ms.d2)?;

let r_stereo = mat_from_data(¶ms.r)?;

let t_stereo = mat_from_data(¶ms.t)?;

println!("Calibration Data Loaded. RMS Error: {:.4}, Image size: {}x{}", params.rms_error, image_size.width, image_size.height);

// --- 2. Stereo Rectification ---

let (mut r1, mut r2) = (Mat::default(), Mat::default());

let (mut p1, mut p2) = (Mat::default(), Mat::default());

let (mut q, mut roi1, mut roi2) = (Mat::default(), Rect::default(), Rect::default());

println!("--- 2. Calculating Rectification Maps ---");

calib3d::stereo_rectify(

&k1, &d1, &k2, &d2,

image_size,

&r_stereo, &t_stereo,

&mut r1, &mut r2, &mut p1, &mut p2, &mut q,

calib3d::CALIB_ZERO_DISPARITY, -1.0, image_size,

&mut roi1, &mut roi2,

)?;

// --- 3. Compute Undistortion and Remapping Maps ---

let (mut map1x, mut map1y) = (Mat::default(), Mat::default());

let (mut map2x, mut map2y) = (Mat::default(), Mat::default());

calib3d::init_undistort_rectify_map(

&k1, &d1, &r1, &p1, image_size,

core::CV_32FC1, &mut map1x, &mut map1y,

)?;

calib3d::init_undistort_rectify_map(

&k2, &d2, &r2, &p2, image_size,

core::CV_32FC1, &mut map2x, &mut map2y,

)?;

println!("Rectification maps generated successfully.");

// --- 4. Load Test Image Pair ---

let left_path = format!("{}/left_frame_{:05}.jpg", DATA_DIR, TEST_IMAGE_INDEX);

let right_path = format!("{}/right_frame_{:05}.jpg", DATA_DIR, TEST_IMAGE_INDEX);

println!("--- 4. Loading Test Images: {} and {} ---", left_path, right_path);

let src_l = imgcodecs::imread(&left_path, IMREAD_COLOR)?;

let src_r = imgcodecs::imread(&right_path, IMREAD_COLOR)?;

if src_l.empty() || src_r.empty() {

return Err(Error::new(core::Code::StsError.into(), &format!("Failed to load test image pair index {}.", TEST_IMAGE_INDEX)));

}

// --- 5. Apply Remapping to Rectify Images ---

let (mut rectified_l, mut rectified_r) = (Mat::default(), Mat::default());

println!("--- 5. Applying Undistortion and Rectification via Remap ---");

imgproc::remap(

&src_l, &mut rectified_l,

&map1x, &map1y,

imgproc::INTER_LINEAR, BORDER_CONSTANT, core::Scalar::default(),

)?;

imgproc::remap(

&src_r, &mut rectified_r,

&map2x, &map2y,

imgproc::INTER_LINEAR, BORDER_CONSTANT, core::Scalar::default(),

)?;

// --- 6. (NEW) Compute Disparity Map ---

println!("--- 6. Computing Disparity Map ---");

let (mut gray_l, mut gray_r) = (Mat::default(), Mat::default());

imgproc::cvt_color(&rectified_l, &mut gray_l, COLOR_BGR2GRAY, 0)?;

imgproc::cvt_color(&rectified_r, &mut gray_r, COLOR_BGR2GRAY, 0)?;

let mut stereo = StereoBM::create(16 * 6, 21)?;

let mut disp_map = Mat::default();

stereo.compute(&gray_l, &gray_r, &mut disp_map)?;

// --- 7. (UPDATED) Visualize Results ---

let mut disp_map_normalized = Mat::default();

core::normalize(&disp_map, &mut disp_map_normalized, 0.0, 255.0, NORM_MINMAX, -1, &Mat::default())?;

let mut disp_map_8u = Mat::default();

disp_map_normalized.convert_to(&mut disp_map_8u, CV_8UC1, 1.0, 0.0)?;

let mut disp_color = Mat::default();

// This function call is why we don't need to import APPLY_COLOR_MAP

imgproc::apply_color_map(&disp_map_8u, &mut disp_color, COLORMAP_JET)?;

let mut combined_rect = Mat::default();

core::hconcat2(&rectified_l, &rectified_r, &mut combined_rect)?;

let combined_width = combined_rect.cols();

for i in (0..combined_rect.rows()).step_by(50) {

imgproc::line(&mut combined_rect, core::Point::new(0, i), core::Point::new(combined_width, i), core::Scalar::new(0.0, 255.0, 0.0, 0.0), 1, 8, 0)?;

}

println!("\nRectification and Disparity complete. Check the displayed windows!");

println!("Press 'q' in any image window to exit...");

highgui::named_window("Rectified Stereo Pair", WINDOW_AUTOSIZE)?;

highgui::imshow("Rectified Stereo Pair", &combined_rect)?;

highgui::named_window("Disparity Map (Depth)", WINDOW_AUTOSIZE)?;

highgui::imshow("Disparity Map (Depth)", &disp_color)?;

highgui::wait_key(0)?;

highgui::destroy_all_windows()?;

Ok(())

}

fn main() {

if let Err(e) = run_depth_pipeline() {

eprintln!("Application Error: {:?}", e);

}

}

//==================================================

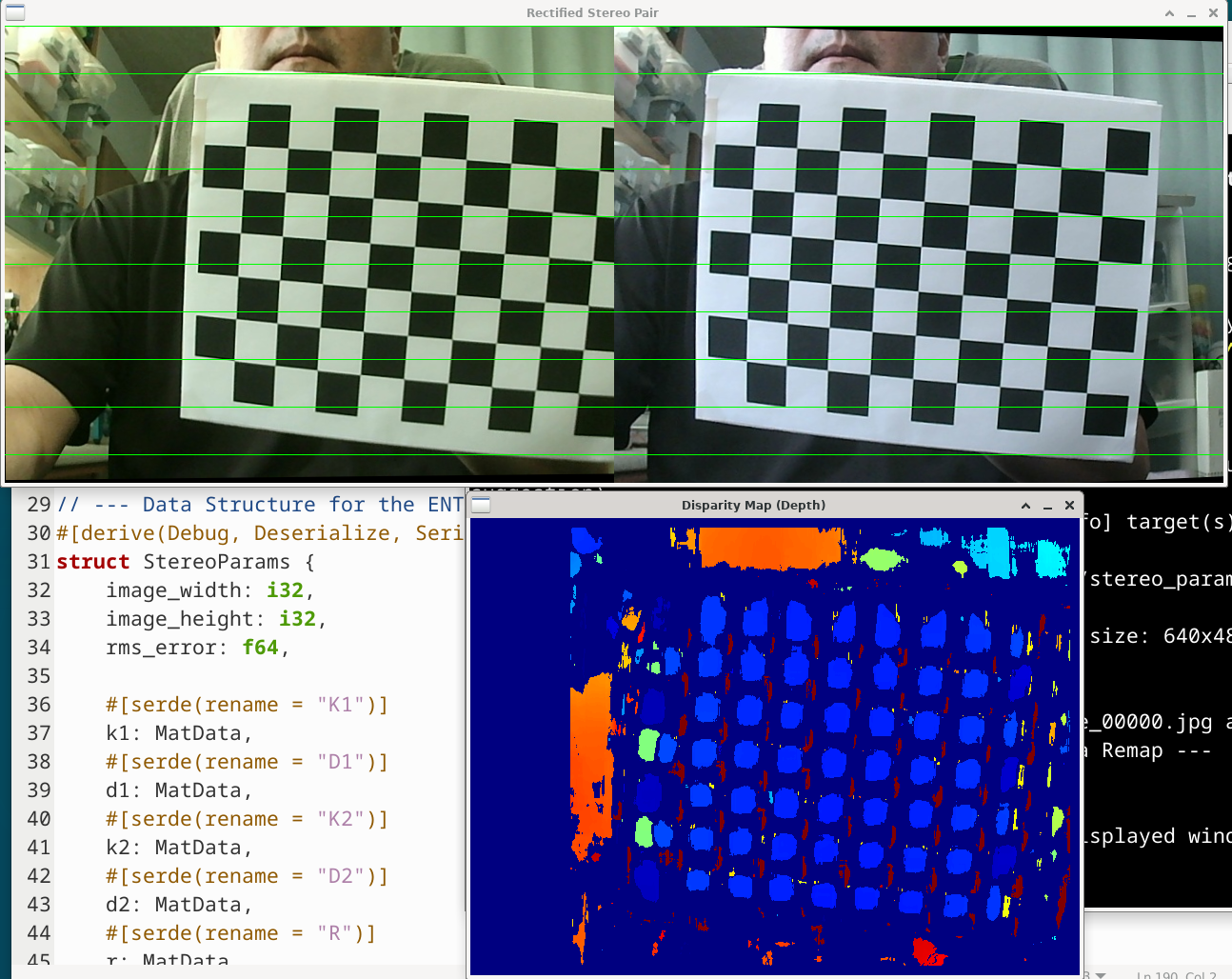

This is exactly what I've been working towards. I can clearly see:

-

Rectified Pair: The top window shows the two images perfectly aligned, with the green horizontal lines proving the alignment.

-

Disparity Map (Depth): The bottom window shows the depth. The red areas on the checkerboard are closer to the camera, and the blue areas (your shirt, the background) are farther away.

I have successfully built a complete stereo vision pipeline, from raw camera capture to a 3D depth map.

Comment 0

| No. | Subject | Author | Date | Views |

|---|---|---|---|---|

| 8 |

Fifth : Visual Odometry

| me | 2025.10.28 | 0 |

| 7 |

Fourth-3 : Filtering Data

| me | 2025.10.28 | 0 |

| 6 |

Fourth-2 : Shrink down data count (4x4 block)

| me | 2025.10.28 | 0 |

| » |

Fourth : Depth Map

| me | 2025.10.28 | 0 |

| 4 |

Third : Rectify calibrated result

| me | 2025.10.28 | 0 |

| 3 | Second : Calibration using checker board [1] | me | 2025.10.28 | 0 |

| 2 |

First : Stereo Camera's image generation for calibration

| me | 2025.10.28 | 0 |

| 1 | Set-up in x64 Debian for development | me | 2025.10.27 | 0 |