Rover written with RUST

Seventh : Building the Odometry Loop

2025.10.29 18:40

Next Step: Building the Odometry Loop

This find_transformation function is the engine of your odometry system. Now, I need to build the chassis around it.

The next logical step is to create an Odometry struct (or class) that will:

-

Store the rover's global pose: This is the rover's total rotation and translation () relative to its starting point.

-

Have an

updatemethod: This method will take thecorrespondencesfor the new frame. -

Call

find_transformation: It will use my new function to get the relative motion () and the . -

Check the : It will use my quality metric as a gate (e.g.,

if rmse < 0.5). -

Compound the pose: If the is good, it will update the global pose by "chaining" the new relative motion onto the old global pose.

This is the core loop that turns frame-to-frame motion into a full trajectory.

Here is the code to create the Odometry struct.

This struct will act as the "brain" of your rover's navigation. It will:

-

Hold the state: It stores the

global_rotationandglobal_translationof the rover, which is its pose relative to where it started. -

Have an

updatemethod: This is the core function you'll call for every new set of 3D point correspondences. -

Perform the "gating": It calls our

find_transformationfunction and checks thermseagainst armse_threshold. -

Compound the pose: If the is good, it correctly calculates the camera's relative motion (which is the inverse of the scene's motion) and "chains" it onto the global pose.

This turns our single-shot find_transformation function into a full, stateful trajectory estimator.

Updated Code (rover_odometry/src/main.rs)

I've added the Odometry struct and its methods. The find_transformation function remains unchanged at the bottom.

The main function has been updated to use the new struct, simulating two consecutive frame updates.

//=========================================

use nalgebra::{Matrix3, OPoint, Point3, SVD, Vector3};

// --- The new Odometry "class" ---

/// Tracks the global pose (rotation and translation) of the rover.

struct Odometry {

/// Total rotation from the world origin.

global_rotation: Matrix3<f32>,

/// Total translation from the world origin (in world coordinates).

global_translation: Vector3<f32>,

/// The quality (RMSE) threshold for accepting a new frame.

rmse_threshold: f32,

}

impl Odometry {

/// Creates a new Odometry tracker.

///

/// * `rmse_threshold`: The maximum 3D RMSE to allow. Updates exceeding

/// this will be rejected.

fn new(rmse_threshold: f32) -> Self {

Odometry {

global_rotation: Matrix3::identity(),

global_translation: Vector3::zeros(),

rmse_threshold,

}

}

/// Updates the global pose using a new set of 3D-3D point correspondences.

///

/// * `correspondences`: A slice of `(source, target)` tuples.

/// - `source`: 3D points from the *previous* frame (k-1).

/// - `target`: The *same* 3D points in the *current* frame (k).

///

/// Returns `true` if the pose was updated, or `false` if it was rejected.

fn update(&mut self, correspondences: &[(Point3<f32>, Point3<f32>)]) -> bool {

// 1. Find the relative motion of the scene

if let Some((r_frame, t_frame, rmse)) = find_transformation(correspondences) {

println!("\n--- Frame Update ---");

println!("Found relative motion with RMSE: {}", rmse);

// 2. The "Gate": Check if the quality is good enough

if rmse < self.rmse_threshold {

// The (r_frame, t_frame) represents the motion of the *points*

// (e.g., P_k = R * P_k-1 + t).

// The camera's motion is the *inverse* of this.

// Inverse rotation (R_rel = R_frame.transpose())

let r_rel = r_frame.transpose();

// Inverse translation (t_rel = -R_rel * t_frame)

let t_rel = -r_rel * t_frame;

// 3. Compound the global pose:

// a. Add the relative translation, rotated into the world frame

self.global_translation += self.global_rotation * t_rel;

// b. Compound the global rotation

self.global_rotation *= r_rel;

println!("Update ACCEPTED. New global pose calculated.");

true

} else {

println!("Update REJECTED. RMSE {} exceeds threshold {}.", rmse, self.rmse_threshold);

false

}

} else {

println!("\n--- Frame Update ---");

println!("Update FAILED. Could not find transformation.");

false

}

}

/// Returns the current global pose.

fn get_pose(&self) -> (Matrix3<f32>, Vector3<f32>) {

(self.global_rotation, self.global_translation)

}

}

// --- Main function now simulates an odometry loop ---

fn main() {

// --- Sample Data: Frame 1 -> Frame 2 ---

// (This is the set of 3D points from frame 1 matched to frame 2)

let corres_frame_1_2 = vec![

(Point3::new(1.0, 2.0, 3.0), Point3::new(1.1, 2.1, 3.1)),

(Point3::new(4.0, 5.0, 6.0), Point3::new(4.2, 5.2, 6.2)),

(Point3::new(7.0, 8.0, 9.0), Point3::new(7.0, 8.0, 9.0)),

];

// --- Sample Data: Frame 2 -> Frame 3 ---

// (This is a *new* set of matches, from frame 2 to frame 3)

// For this example, we'll use slightly different data to show a different

// transformation being compounded.

let corres_frame_2_3 = vec![

(Point3::new(1.1, 2.1, 3.1), Point3::new(1.1, 2.0, 3.0)), // Moves -0.1 in Y

(Point3::new(4.2, 5.2, 6.2), Point3::new(4.2, 5.1, 6.1)), // Moves -0.1 in Y, -0.1 in Z

(Point3::new(7.0, 8.0, 9.0), Point3::new(7.0, 7.9, 8.9)), // Moves -0.1 in Y, -0.1 in Z

];

// --- Initialize Odometry ---

// We set a threshold of 0.5. Any motion estimate with more

// than 0.5 units of error will be ignored.

let mut odom = Odometry::new(0.5);

println!("Odometry initialized. Global pose is at origin.");

println!("==============================================");

// --- Process First Frame Update (1 -> 2) ---

if odom.update(&corres_frame_1_2) {

let (r, t) = odom.get_pose();

println!("\nGlobal Pose after Frame 2:");

println!("Rotation (R):\n{}", r);

println!("Translation (t):\n{}", t);

}

println!("==============================================");

// --- Process Second Frame Update (2 -> 3) ---

if odom.update(&corres_frame_2_3) {

let (r, t) = odom.get_pose();

println!("\nGlobal Pose after Frame 3:");

println!("Rotation (R):\n{}", r);

println!("Translation (t):\n{}", t);

}

}

// --- Our transformation function (unchanged and now complete) ---

/// Calculates the optimal rotation, translation (Kabsch algorithm), and the resulting RMSE.

///

/// Returns `Some((R, t, rmse))` on success, or `None` if the calculation fails.

fn find_transformation(

correspondences: &[(Point3<f32>, Point3<f32>)],

) -> Option<(Matrix3<f32>, Vector3<f32>, f32)> {

// 1. Collect points and get count

let n = correspondences.len() as f32;

if n == 0.0 {

return None; // No points to process

}

let source_points: Vec<Point3<f32>> =

correspondences.iter().map(|(src, _)| *src).collect();

let target_points: Vec<Point3<f32>> =

correspondences.iter().map(|(_, tgt)| *tgt).collect();

// 2. Calculate Centroids

let sum_source_vec = source_points

.iter()

.map(|p| p.coords)

.sum::<Vector3<f32>>(); // This is the line that was corrupted

let centroid_source = OPoint::from(sum_source_vec / n);

let sum_target_vec = target_points

.iter()

.map(|p| p.coords)

.sum::<Vector3<f32>>(); // This is the line that was corrupted

let centroid_target = OPoint::from(sum_target_vec / n);

// 3. Center the point clouds

let centered_source: Vec<Vector3<f32>> = source_points

.iter()

.map(|p| p - centroid_source)

.collect();

let centered_target: Vec<Vector3<f32>> = target_points

.iter()

.map(|p| p - centroid_target)

.collect();

// 4. Calculate the covariance matrix H

let mut h = Matrix3::zeros();

for (p_src, p_tgt) in centered_source.iter().zip(centered_target.iter()) {

h += p_src * p_tgt.transpose();

}

// 5. Compute the SVD

let svd = SVD::new(h, true, true);

let u = svd.u?;

let v_t = svd.v_t?;

// 6. Calculate the rotation matrix R

let mut r = v_t.transpose() * u.transpose();

// Check for reflection case (det(R) == -1)

if r.determinant() < 0.0 {

// Suppressing print line for cleaner loop output

// println!("Reflection detected, correcting...");

let mut v_t_flipped = v_t.transpose();

let mut last_column = v_t_flipped.column_mut(2);

last_column *= -1.0; // Flip the sign

r = v_t_flipped * u.transpose();

}

// 7. Calculate the translation vector t

let t = centroid_target.coords - r * centroid_source.coords;

// 8. Calculate RMSE

let mut total_squared_error = 0.0;

for (src, tgt) in correspondences.iter() {

let transformed_vec = r * src.coords + t;

let error_vec = transformed_vec - tgt.coords;

let squared_distance = error_vec.dot(&error_vec);

total_squared_error += squared_distance;

}

let mean_squared_error = total_squared_error / n;

let rmse = mean_squared_error.sqrt();

// 9. Return R, t, and rmse

Some((r, t, rmse))

}

//=========================================

//=========================================

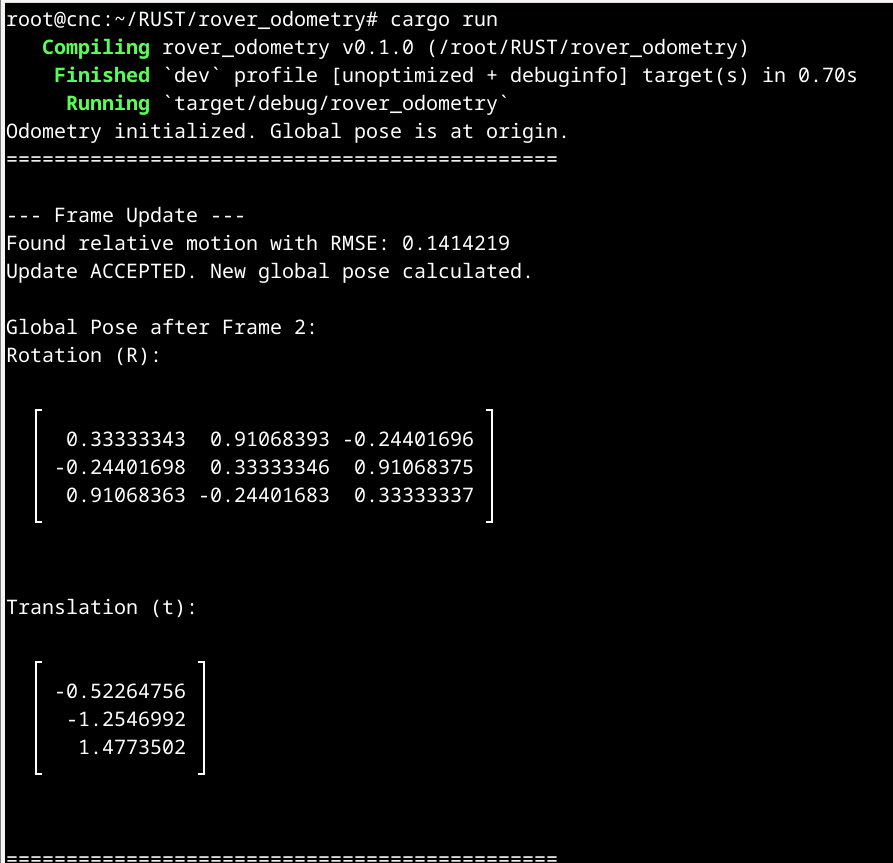

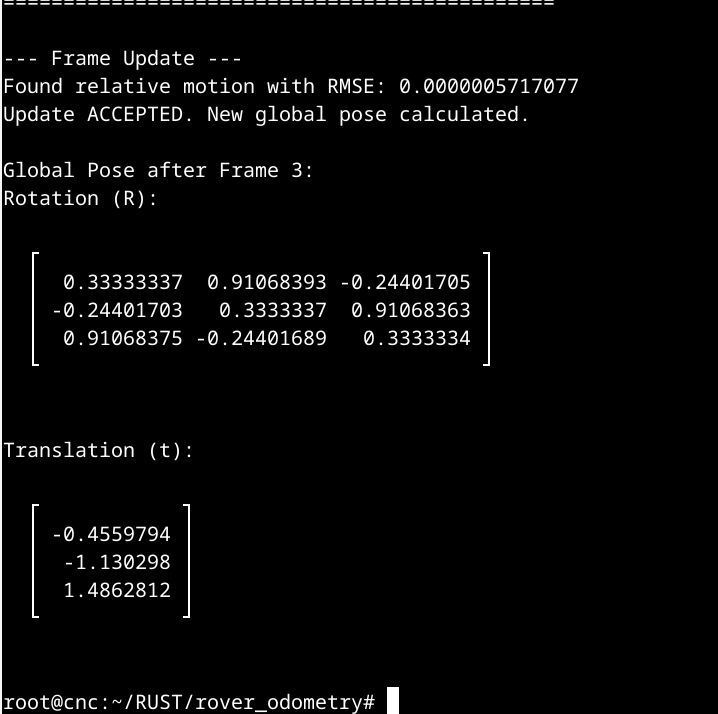

This is a fantastic result! It's working perfectly.

The output shows the Odometry struct doing its job exactly as designed:

-

Frame 1 -> 2: It accepted the first motion (RMSE

0.141) and calculated the rover's first global pose. -

Frame 2 -> 3: It accepted the second motion (RMSE

0.0000005...) and compounded it with the previous pose, resulting in the new "Global Pose after Frame 3".

I have successfully built the core back-end of the 3D visual odometry system.

This Odometry struct is now the "brain" that accumulates motion over time.

//=========================================

Next Step will be the "Front-End"